7 Alarming Trends Reshaping Cybersecurity

Highlights

- AI-driven ransomware campaigns now rely on hyper-personalized, human-like phishing delivered at massive scale.

- Criminals use generative tools to automate reconnaissance, craft deepfakes, and adapt attacks in real time.

- Traditional security tools often fail because AI-enabled malware mutates and evolves faster than legacy defenses can adapt.

- SMEs, hospitals, schools, and supply-chain vendors are becoming prime ransomware targets because they tend to be less well protected.

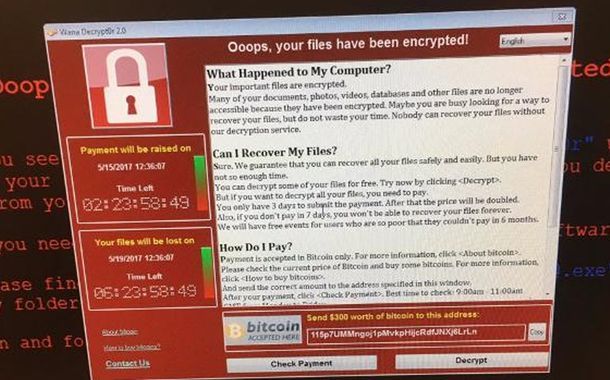

In November 2025, a mid-sized logistics company in Southeast Asia watched its entire operations grind to a halt. Delivery schedules froze. Payments failed to process. Employees were locked out of devices displaying a blunt message: “Your data has been encrypted.”

But what struck the company’s cybersecurity team wasn’t the attack itself (ransomware has long been a digital threat) but how eerily tailored the intrusion was. The phishing email mimicked the CEO’s writing style, referenced an internal meeting, and used personal details that a human attacker would need hours of reconnaissance to gather. Yet the hackers didn’t spend hours on it. An AI system did the work.

This is the new face of ransomware: fast, adaptive, and increasingly powered by generative intelligence. Industry estimates suggest that as many as 80% of global cyberattacks now involve AI assistance, not because criminals suddenly became smarter, but because AI tools have become powerful, cheap, and easy to misuse.

When Criminals Get a Hold of Generative Tools

Traditional ransomware attacks required skills like coding, social engineering, and the patience to study a target. That learning curve was a natural barrier.

But generative AI has erased much of that barrier. It is capable of:

- Producing convincing phishing emails in seconds

- Imitating writing styles based on samples scraped online

- Generating scripts or code that criminals can repurpose as malicious payloads

- Analyzing organizational structures from public data

- Automating conversations to manipulate victims

- Rapidly adapting tactics when defenses are detected

To be clear, AI models themselves do not intend harm. Most are built for beneficial uses: summarizing text, writing code, or answering questions. But cybercriminals have learned to exploit them indirectly by feeding them stolen information, using jailbroken interfaces, or relying on underground AI models explicitly trained to bypass safety rules.

How Phishing Has Been Reinvented

Phishing used to be easy to spot. Bad grammar. Wrong names. Suspicious urgency. In 2025, those telltale signs are disappearing. AI-generated phishing messages often read as if a coworker wrote them. They reference actual industry events. They use the right jargon. Some even imitate a manager’s tone so well that employees don’t think twice before clicking. Here is what has changed:

- Personalization: Criminals feed public data such as LinkedIn posts, customer reviews, and public filings into AI models, so the resulting messages feel intimately relevant.

- Large-scale automation: Instead of targeting 10 people, attackers can target 10,000, with each email unique and individually tailored.

- Voice and video deepfakes: Sophisticated groups now use AI-generated voice or video messages to impersonate executives who appear to approve transfers or ask employees to open “urgent” files.

As a result, phishing detection tools built to flag suspicious language often fail. The emails are simply too human.

AI-Automated Attacks

Cyberattacks used to be linear: identify a vulnerability, exploit it, and hope it works.

AI-assisted attacks are different. They are iterative. Criminals deploy automated agents that:

- Scan for vulnerabilities

- Attempt multiple pathways simultaneously

- Log which defenses triggered alerts

- Adjust strategies in real time

This allows attackers to probe a network like a seasoned penetration tester, except at machine speed. Some AI systems even simulate multiple attack scenarios and select the most promising one before launching. For defenders, it’s like trying to block a shapeshifting adversary that learns with every move. “It’s not just more attacks, it’s smarter attacks, faster attacks, and relentless attacks,” notes a European CERT (Computer Emergency Response Team) specialist.

Why Traditional Defenses Are Struggling

Many organizations still rely on security stacks built for a world of predictable threats: firewalls, signature-based antivirus, rule-based email filters.

But AI-driven ransomware doesn’t follow predictable patterns. The problem isn’t visibility; it’s adaptability.

- Email filters miss AI-generated phishing because it doesn’t resemble past attacks.

- Antivirus tools struggle because malicious scripts mutate automatically.

- Employees often can’t distinguish real messages from AI-crafted ones.

- SOC (Security Operations Center) teams are overwhelmed by alert volume.

It’s like playing chess against a computer that learns from every game you’ve ever played.
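To illustrate why exact-match signatures struggle against self-modifying scripts, here is a minimal Python sketch, assuming a deliberately simplified scanner that flags a file only when its SHA-256 hash already appears in a database of known samples. Real antivirus engines use far richer signatures and heuristics; the `SIGNATURES` set, the `is_flagged` helper, and the harmless placeholder sample are all hypothetical.

```python
import hashlib

# Hypothetical signature database: SHA-256 digests of previously catalogued samples.
# A harmless placeholder string stands in for a known malicious script.
KNOWN_SAMPLE = b"echo 'placeholder for a previously catalogued script'"
SIGNATURES = {hashlib.sha256(KNOWN_SAMPLE).hexdigest()}


def is_flagged(sample: bytes) -> bool:
    """Return True only if the sample's exact hash is already in the database."""
    return hashlib.sha256(sample).hexdigest() in SIGNATURES


original = KNOWN_SAMPLE
# A trivial "mutation": append a shell comment, which does not change what the script does.
mutated = KNOWN_SAMPLE + b"  # variant 2"

print(is_flagged(original))  # True
print(is_flagged(mutated))   # False
```

Appending a single comment produces an entirely new hash, so the functionally identical variant slips past the check. This is one reason behavior-based detection is often used alongside exact-match signatures.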

The Human Element

Perhaps the most unsettling part of AI-driven ransomware is not the technology itself but how skillfully it exploits human emotions. Using behavioral models, AI systems craft messages that play on stress, urgency, or fear. They mimic interpersonal dynamics and politely mirror the communication patterns of colleagues. Some attacks are timed to exploit moments of vulnerability:

- Periods when a company is restructuring

- Holidays, when staffing is thin

- Global crises or breaking news

- Local events, like elections or storms

Humans remain the most reliable entry point for attackers, and AI is making that entry point easier to exploit.

Who’s Being Targeted?

AI-powered ransomware has expanded beyond the traditional big-tech or financial targets:

- Small and Medium Enterprises (SMEs): AI lowers the cost of an attack, making smaller businesses worthwhile targets alongside large ones.

- Healthcare Systems: Hospitals often have older IT systems and cannot afford extended downtime.

- Educational Institutions: Universities hold sensitive data and have distributed networks.

- Municipal Governments: Local bodies often lack dedicated cybersecurity budgets and expertise.

- Supply-Chain Vendors: Attackers use small vendors as footholds to reach major corporations.

Weaponization of Progressive Technology

Perhaps the most painful irony is that AI’s breakthroughs in creativity, automation, and linguistic fluency were meant to expand human potential. Instead, they’ve also expanded criminal potential.

This dual-use dilemma isn’t new in technology. But AI magnifies it.

Tools designed for harmless tasks like code generation or email drafting become harmful when misused.

The challenge facing policymakers and tech companies is profound:

How do you regulate something that is both a productivity engine and a potential weapon?

Stronger guardrails, watermarking, and model restrictions are being rolled out worldwide. But the cybercriminal ecosystem evolves quickly, finding loopholes almost as soon as new safeguards appear.

Conclusion

The rise of AI-driven ransomware is not just a technological story; it’s a human one. It affects small businesses struggling to stay afloat, hospitals trying to protect patients, and everyday workers navigating increasingly deceptive digital communications.

Artificial intelligence did not create the impulse for cybercrime, but it has undeniably changed its scale and sophistication. With industry estimates suggesting that as many as 80% of attacks now involve AI, the threat landscape is evolving faster than many organizations can respond.

Still, this is not a hopeless battle.

As governments strengthen regulations, companies adopt AI-driven defense tools, and employees become better trained, the balance can shift again.

The next chapter of cybersecurity will not be written by attackers alone, but by the global effort to ensure that AI remains a tool for progress, not exploitation.
