5 Game-Changing Breakthroughs Boosting AI Speed

Highlights

- Google deploys Gemini 3 Flash as the default model across Search AI Mode and the Gemini app globally.

- The new model delivers faster responses with improved reasoning for complex, multi-part queries.

- U.S. users gain access to Gemini 3 Pro and advanced visual AI tools within Search AI Mode.

- Developers benefit from higher performance-per-cost AI via the Gemini API, AI Studio, and Android Studio.

In a significant expansion of its artificial intelligence offerings, Google has begun rolling out Gemini 3 Flash, a new, high-performance AI model that combines advanced reasoning with rapid response times, across Google Search’s AI Mode and the Gemini app globally.

The announcement marks a strategic enhancement of the company’s AI capabilities, designed to deliver faster, more contextual results while broadening access to cutting-edge model intelligence for both everyday users and developers.

Accelerating Search Intelligence

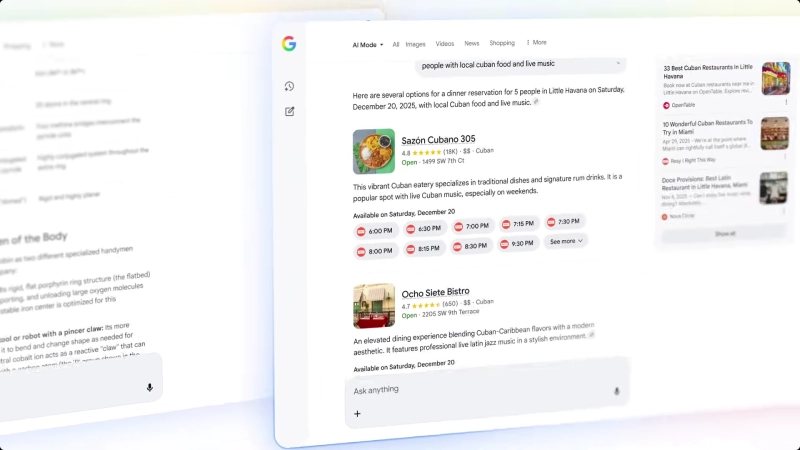

At the heart of Google’s update is the deployment of Gemini 3 Flash as the default model for Search’s AI Mode, the feature that provides conversational, AI-generated answers to complex queries. Previously powered by earlier Flash models, AI Mode now uses Gemini 3 Flash’s stronger reasoning to handle multi-part questions with improved comprehension and precision while maintaining the quick turnaround expected from Search.

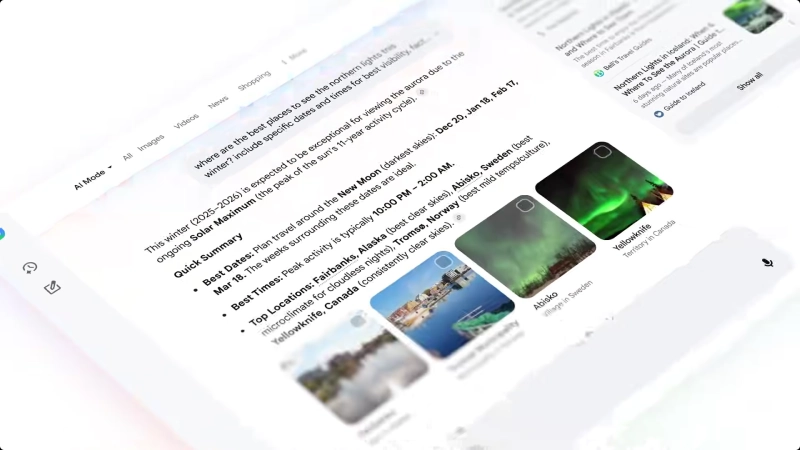

According to Google’s product team, this rollout is designed to make sophisticated AI answers more widely available. With Gemini 3 Flash integrated into Search, users around the world will now receive results built on a model that blends frontier reasoning with low latency. That combination enables rapid responses to nuanced requests, such as interpreting text, generating data summaries, and providing context-heavy explanations, all within the interactive experience of AI Mode.

Beyond the Flash model’s speed and efficiency, Google is expanding access to higher-capability models in Search for users in the United States. Those selecting “Thinking with 3 Pro” in AI Mode can access Gemini 3 Pro, which offers deeper analytical support and dynamic visual layouts with interactive tools and simulations generated on the fly.

Google is additionally broadening the use of Nano Banana Pro, a state-of-the-art image generation and editing model, giving users more creative and visual exploration options.

Gemini App Gets a Performance Boost

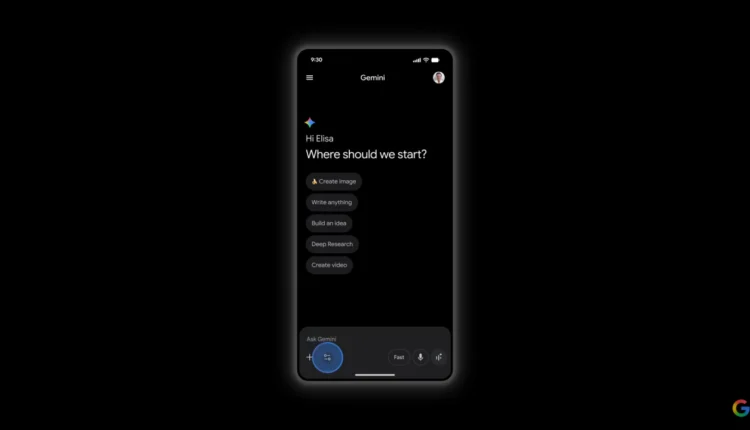

On the consumer front, the Gemini app is also receiving Gemini 3 Flash, giving millions of users a faster and more capable assistant experience. Google has introduced two distinct usage modes within the app: “Fast” for quick, concise responses and “Thinking” for tackling more complex requests. In both modes, the default underlying model is Gemini 3 Flash, which replaces the older Gemini 2.5 Flash and offers users a significant upgrade in responsiveness and analytical depth at no additional cost.

Notably, the rollout retains user choice: while Gemini 3 Flash serves as the default, users can still select Gemini 3 Pro for tasks requiring advanced math, in-depth code support, or high-precision reasoning. This tiered model selection supports a broader spectrum of needs, from everyday informational queries to professional-level problem-solving.

Implications for Developers and the Broader AI Ecosystem

Beyond consumer applications, Gemini 3 Flash is a noteworthy release for developers building with Google’s AI infrastructure. The model is now accessible via Google AI Studio, the Gemini API, Android Studio, and tools such as the Gemini CLI. Early indicators suggest it offers substantially higher performance per unit cost than previous Flash and Pro versions, reportedly operating up to 3 times faster than the older Gemini 2.5 Pro while costing a fraction of the price for token-based API usage.
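To make the performance-per-cost claim concrete, here is a minimal back-of-the-envelope sketch in Python. It assumes "performance" can be approximated by raw speed, which is a simplification. The function name `relative_perf_per_cost` is hypothetical, and the price ratio used below is a placeholder chosen purely for illustration; the only figure taken from the reporting is the "up to 3 times faster" upper bound.

```python
def relative_perf_per_cost(speedup: float, price_ratio: float) -> float:
    """Estimate how much more work per unit of spend a new model delivers.

    speedup:     new model speed divided by old model speed
                 (3.0 means "3 times faster").
    price_ratio: new model price divided by old model price
                 (0.25 means "a quarter of the price").
    """
    if speedup <= 0 or price_ratio <= 0:
        raise ValueError("speedup and price_ratio must be positive")
    # Faster output and cheaper tokens compound: divide one by the other.
    return speedup / price_ratio


# 3.0 is the reported "up to 3 times faster" upper bound.
# 0.25 is a HYPOTHETICAL placeholder; no actual price figure is given here.
ratio = relative_perf_per_cost(speedup=3.0, price_ratio=0.25)
print(f"{ratio:.1f}x performance per unit cost")
```

Because the two factors multiply, even a modest price reduction paired with the reported speedup would produce a large gain in throughput per dollar. Teams evaluating the model should substitute measured latency and published pricing for their own workloads.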

For enterprise adopters, enhanced multimodal processing and rapid response times open up new possibilities for real-time workflows, advanced data extraction, and automated logic chains that would have been prohibitively slow or costly with earlier models. Use cases range from interactive gaming logic and agentic application development to deepfake detection and large-scale document analysis.

Conclusion

Google’s introduction of Gemini 3 Flash also illustrates a broader competitive posture within the generative AI landscape, where companies are racing to deliver models that balance speed, accuracy, and cost. By embedding this model across core products like Search and the Gemini app, Google is pushing its AI capabilities directly into widely used consumer and developer environments, amplifying both accessibility and real-world utility.

In sum, Gemini 3 Flash’s global rollout represents a significant step in Google’s AI evolution: marrying advanced multimodal reasoning with the immediacy users expect from search and app interactions, while also equipping developers and enterprises with tools that support rapid innovation at scale.

As AI becomes increasingly integrated into everyday tasks, this update showcases Google’s intent to shape how users interact with and benefit from intelligent systems.
